Concept Testing product

Role

Co-lead, Senior Product Designer, 2019

Design strategy

Workshop facilitation

Happy path and IA design

Sketching, whiteboarding, wireframing, designing, and iterating on user flows

Research, testing and designing iterations

Interaction design & specifications

Cross-functional collaboration to hit business targets and launch a new product

Summary of work

We created a tiger team within SurveyMonkey to strategize and build a new product on our platform: Concept Testing modules that let users leverage our experience and methodologies with the flexibility of a competitive, DIY testing product.

Status

This project has been launched and can be found here on the SurveyMonkey (now Momentive.ai) website. Here’s the product announcement if you want to check that out, as well.

“Broaden our service offerings by creating a tool that customers — from Insights Professionals to in-house design teams — can use to test and validate their concepts.”

Overview

At the time we embarked on building this product, the SurveyMonkey platform provided rich features for all users, but we were not orienting our survey tool to address specific user needs. What if we could stay horizontal to serve a large user base, but also go deep to deliver higher value to our users and business? We imagined users coming to SurveyMonkey to choose a product that is highly tailored to their specific needs and workflows, right on our core survey platform.

My role

As a senior designer on this project, I worked closely with stakeholders and cross-functional teams to define and execute this project.

Some of my contributions included:

Facilitated design workshops and collaborative sessions with cross-functional teams to drive feature definition, goals, and strategy

Planned, directed, and orchestrated multiple design initiatives throughout the project lifecycle

Managed and coached designers across teams, levels, and disciplines

Advocated for and applied user-centric processes in collaboration with cross-functional teams, keeping users in focus through research, design, and testing

Setting the context

We started with 12 use cases that our strategy and research teams had generated for our consideration.

We designed, proposed, and led a two-day workshop with 15+ attendees from engineering, product, design, research, strategy, marketing, and customer service. The topics we covered gave valuable insights into historical context, business rationale, company strategy, research findings, and goal alignment.

Design exploration

Participants worked in small groups to generate design hypotheses and explore solutions.

User empathy

We identified key user personas and mapped user journeys. This helped the team build empathy for users, which served as a foundation for our process going forward.

Prioritization matrix

The workshop was designed to explore the use cases from different angles, such as user needs, business objectives, and product scalability. At the end of day 2, the team gathered everything they had learned and ranked the use cases in a prioritization matrix defined by ‘Opportunity’ and ‘Ability-to-win (Feasibility)’.

Aligning perspectives

We partnered with our cross-functional counterparts to synthesize the outcomes and presented our findings and recommendations to C-level leaders. We completed another round of testing with targeted users to nail down the final use case, which we then presented company-wide.

Concept testing product direction

The design lead (Quan Nguyen; now working at Meta 💙) and I were tasked with leading the user experience and design of a new product, in partnership with a product owner, engineering lead, and program manager. The product would live under the umbrella of the core survey experience but offer something different to a new customer base and persona.

Market opportunity to address

Users: Business owners and product developers

Timeframe: Needs feedback in hours (instead of the typical weeks to months it takes to engage with an agency)

Budget: $1k to $30k range

Audience: Needs to target specific customer demographics in their research

Polish required: Most users need to report results to stakeholders

Key user pain points included

User testing galore

Over the course of eight months, the design lead and I ran weekly user research sessions for 16 (almost) consecutive weeks.

This allowed us to gain insights on concepts quickly and move confidently in directions that tested well with our users.

Solutioning

Targeting the correct audience

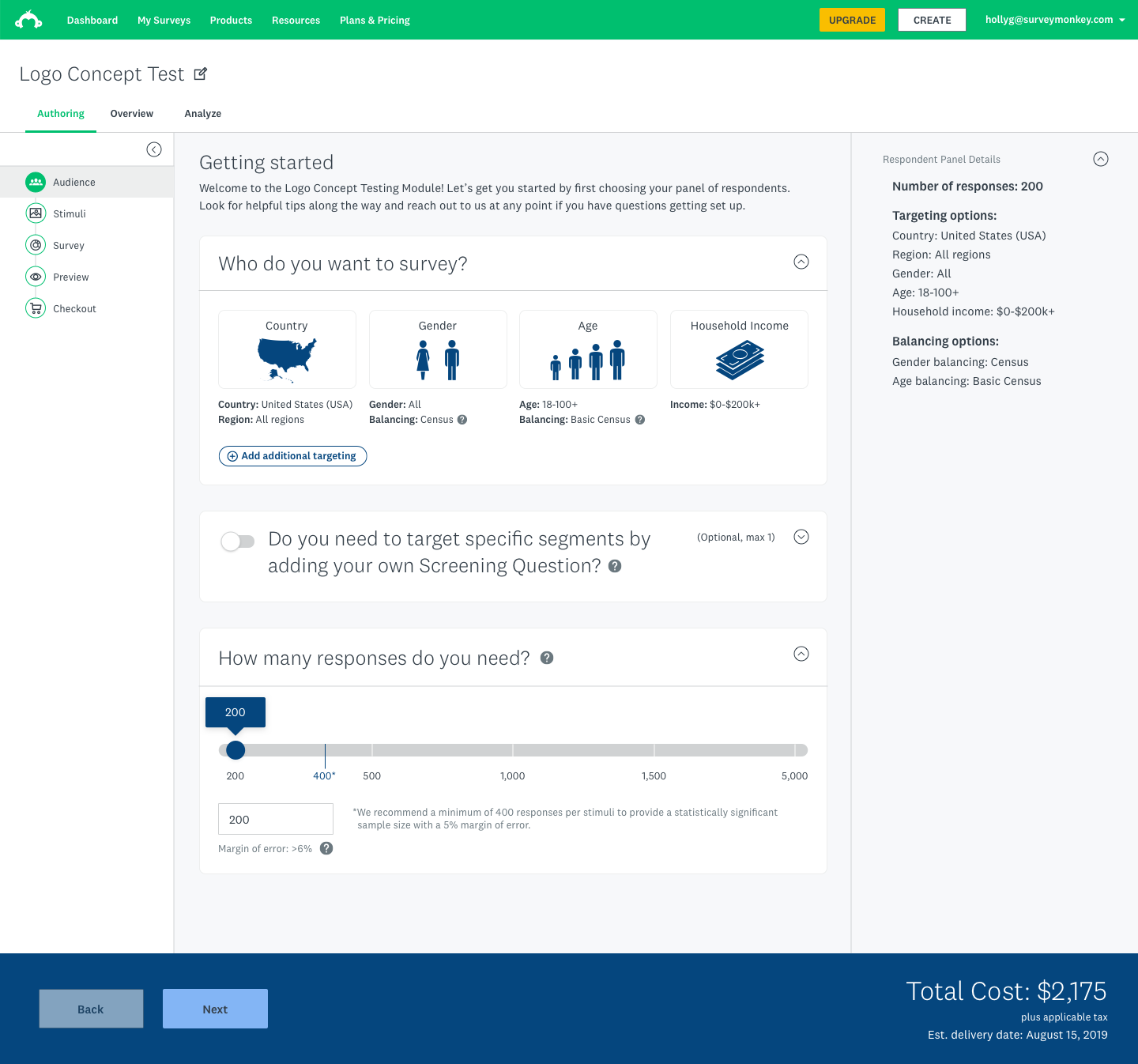

I led the design of audience selection, which utilized our unique panel of research participants across a wide range of demographics. This allowed us to target respondents based on wide-ranging but specific criteria, including multilingual and international respondents.

This portion included target demographic selection, screening questions, and setting the number of responses they’ll need. The dark blue bar at the bottom keeps a running total for the project.

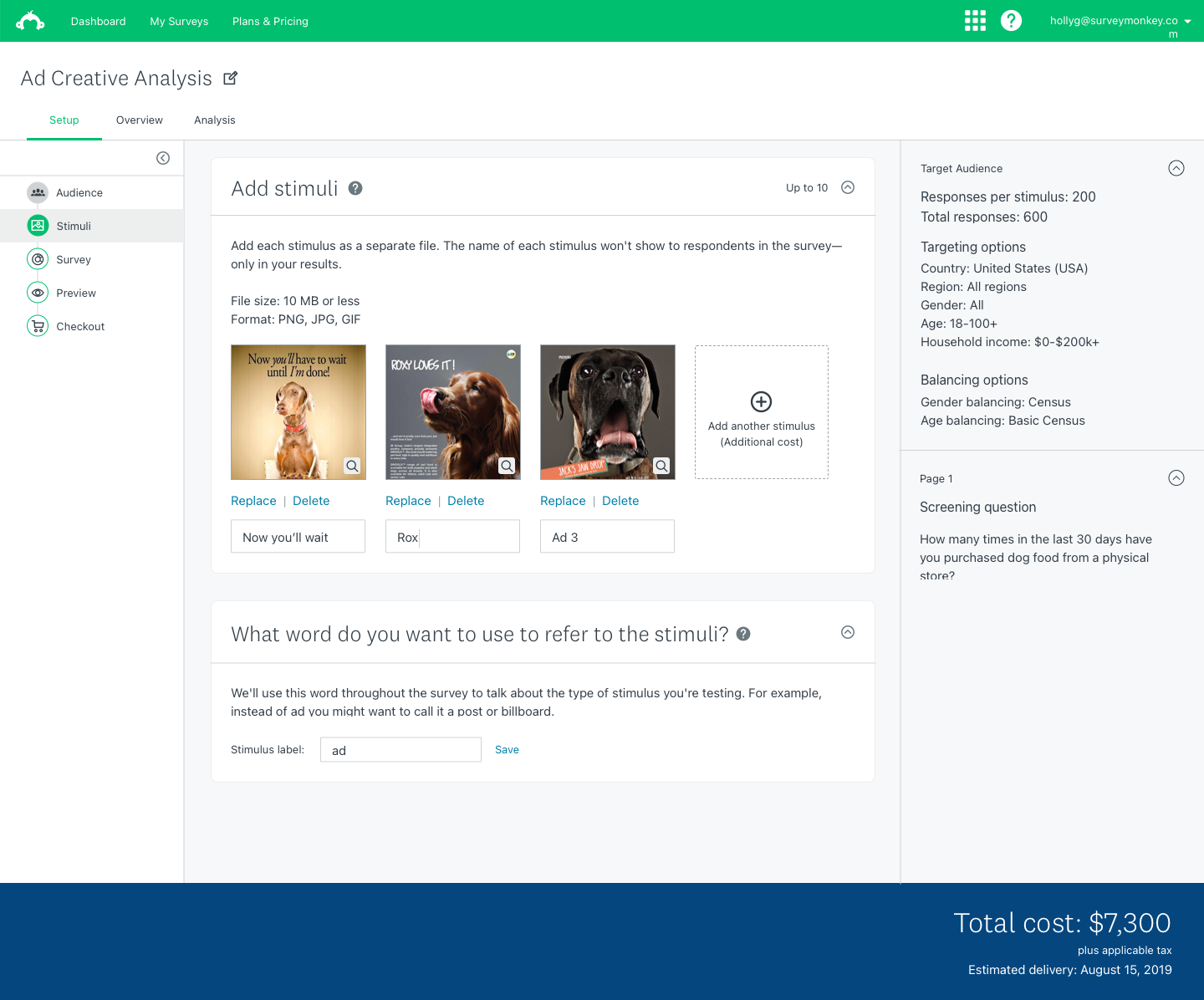

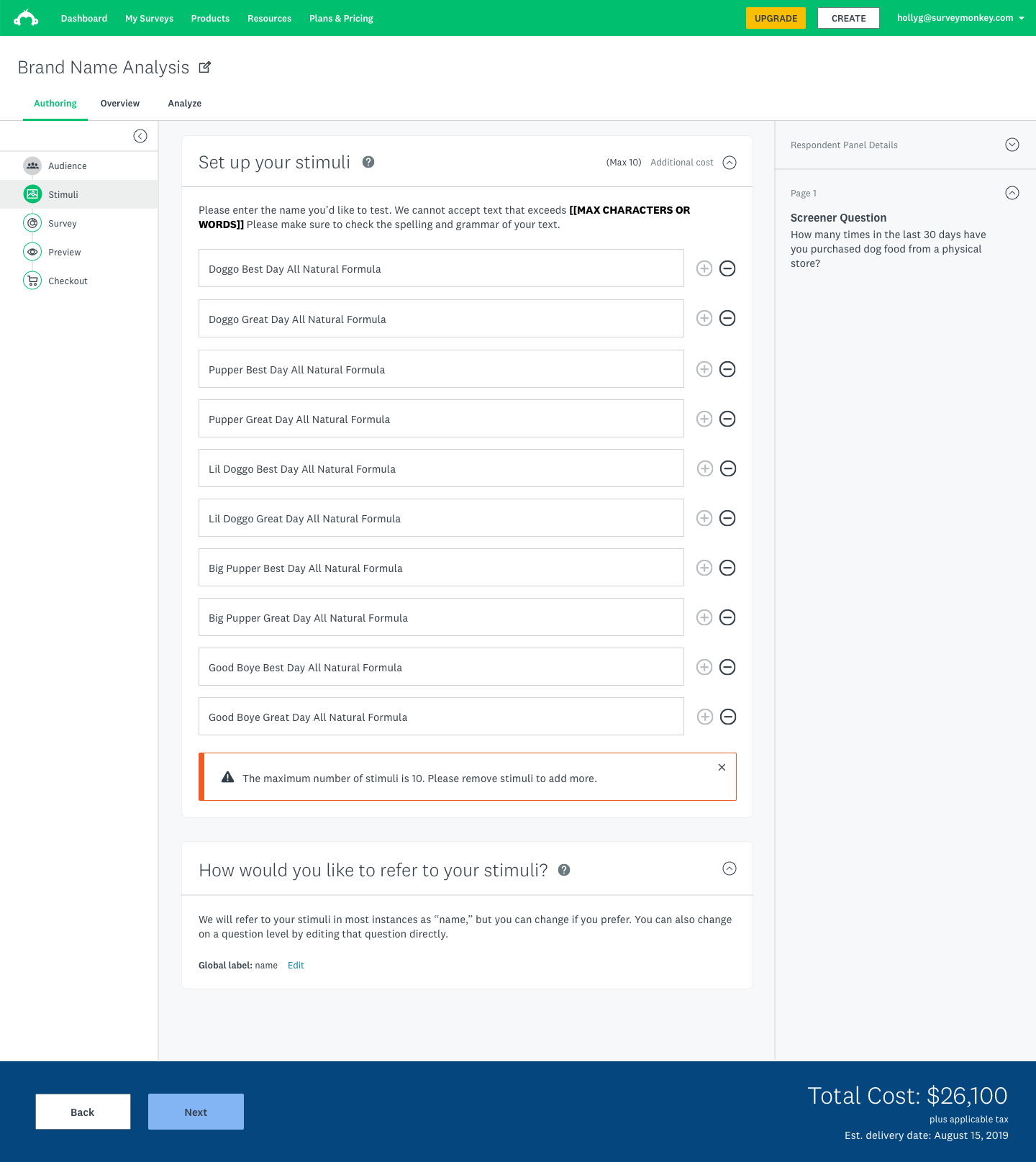

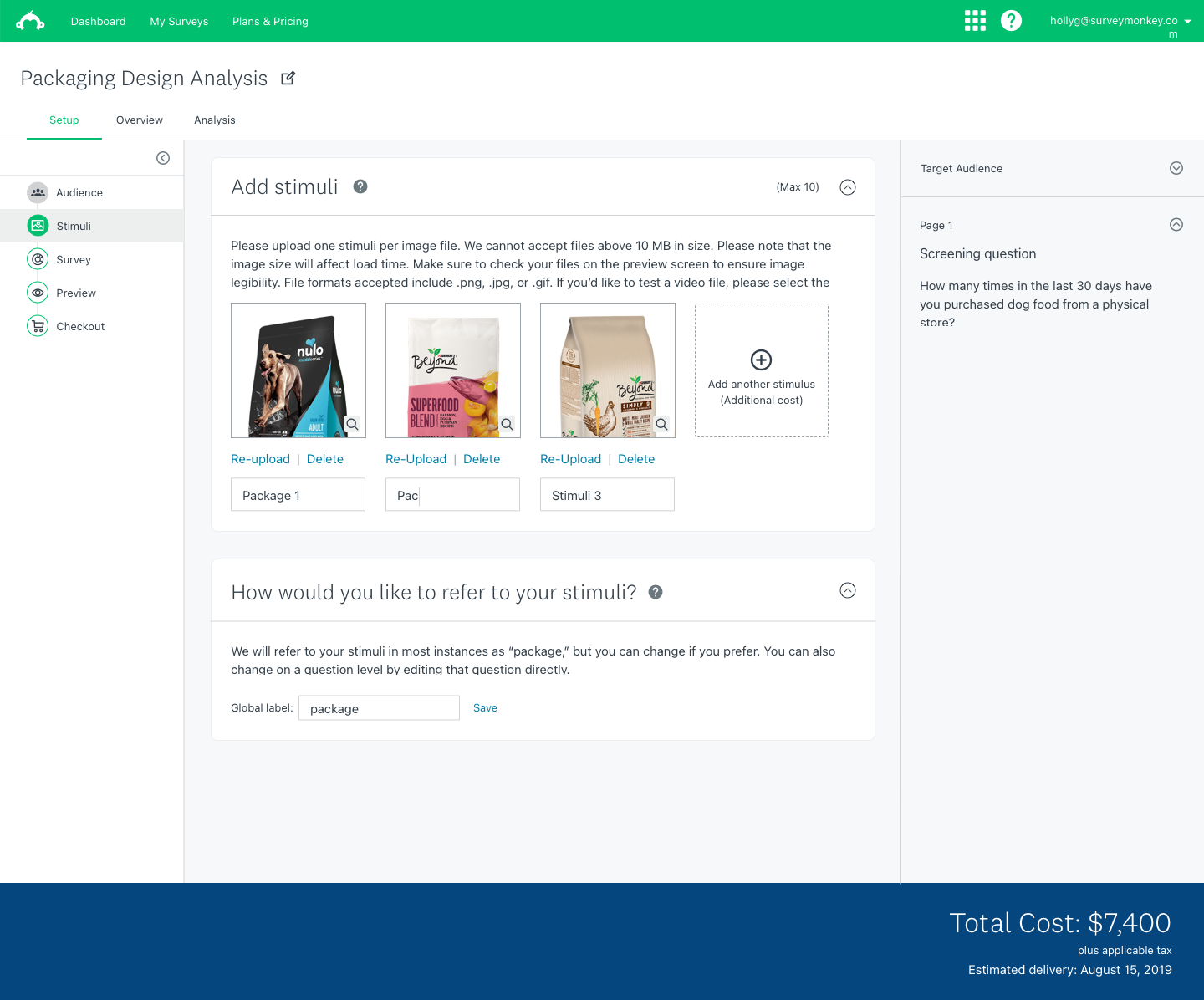

Setting up stimuli to test

I also designed the experiences for uploading stimuli and authoring the test that will go out to participants.

These flows covered adding every stimulus users wanted to test, including ads, product names, videos, images, and packaging designs.

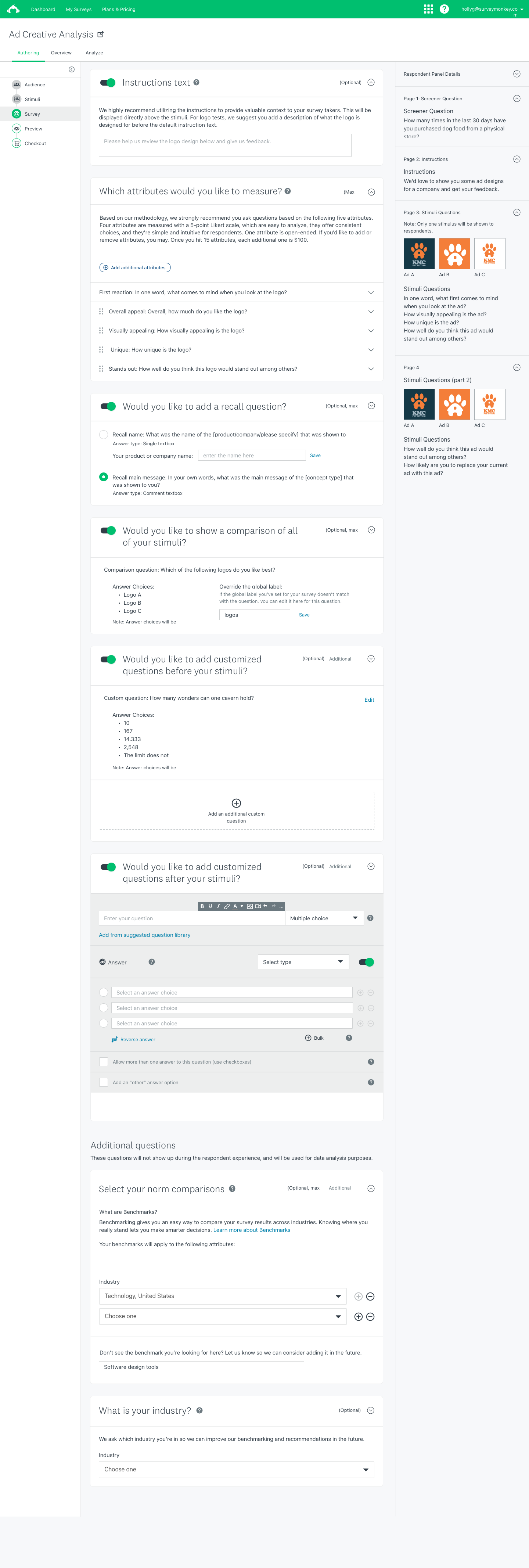

Designing the survey

During the survey step, users can generate instructions for their respondents, select the attributes they want to measure, choose whether to show a comparison of all stimuli, and decide whether to add custom questions before or after their stimuli are shown.

They also have the option to add norm comparisons for their data and to identify the industry they’re in. These inputs are used during the analysis stage to deepen the insights we deliver.

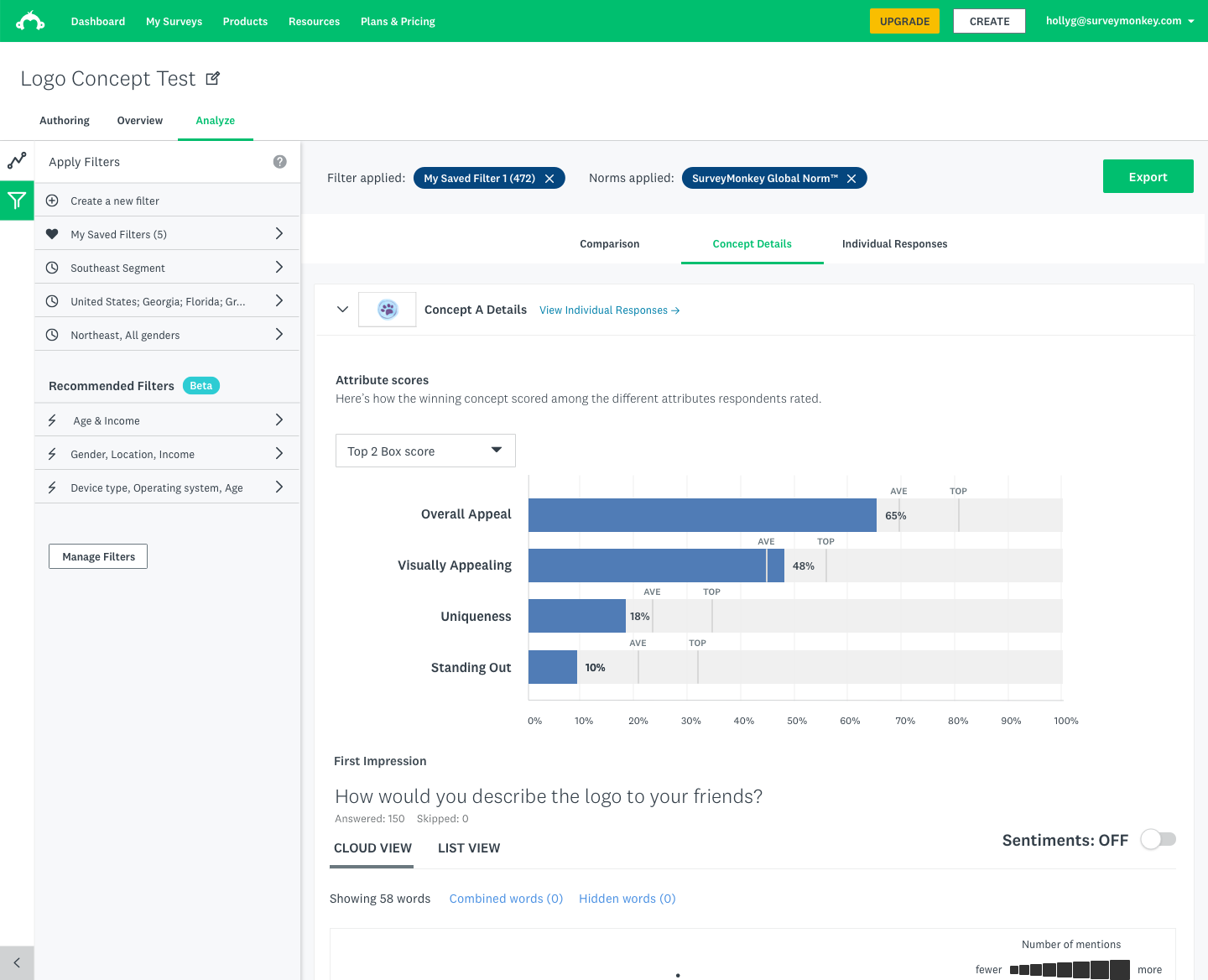

Analyzing and filtering the results

A key offering of this experience was the ability to analyze the data collected during the tests and let users look for additional insights on their own.

This gave them more control over the story they told. Users could also save the complex filtering views they created and apply AI-generated ‘recommended’ filters, which highlighted data outliers and generated additional insights for our market researchers.

How this work was impactful

Designed and developed a new product offering for a $3.2 billion total addressable market, and launched it in 10 months

We created a framework for executing design at this scale in the future

Iterations on our audience panel demographic selection led to a 50% reduction in the time it took users to set up their demographic targeting

“My end goal is to close the loop with stakeholders and use data to tell the story in a compelling way”

Learnings

Iteration will get you there: We applied a lot of rigor to our iteration process, and the effort paid off. We evolved what started as a proposal of ten markets we might go after into a launched product experience for a new market. One of the key drivers was our weekly user testing sessions, where we showed user flows and solution approaches as we iterated on them to get feedback. Our solutions were backed by research, which let us go to market with a high degree of confidence in what we were presenting.

Use today’s work to build on tomorrow’s challenges: While creating this new offering, the design lead and I built a product design framework that future teams can use to build on the learnings and solutions from this work. That way, future efforts will not have to start from the same ‘zero’ state we did; they’ll already have some insights and guidance to start with.